Qwen2.5-Omni: Redefining Local Multimodal AI Performance

Qwen2.5-Omni: The Next Evolution in Local Multimodal AI

The AI world just took a major leap forward. In a groundbreaking release, Alibaba’s Qwen team has open-sourced Qwen2.5-Omni, a robust multimodal large language model that takes in text, audio, images, and video and responds with both text and natural-sounding speech. Best of all, it can run locally on your own machine.

If you’ve been gravitating toward models like OpenAI’s ChatGPT or Google’s Gemini, it’s time to pay close attention: Qwen2.5-Omni outperforms Gemini 1.5 Pro on the OmniBench multimodal reasoning benchmark.

What Makes Qwen2.5-Omni a Game-Changer?

Unlike many advanced AI models that require access to remote servers, Qwen2.5-Omni is designed for full local deployment. This means heightened privacy, speed, and flexibility without cloud dependencies.

Let’s break down the biggest innovations:

- Multimodal Input: Simultaneously processes text, speech, images, and videos.

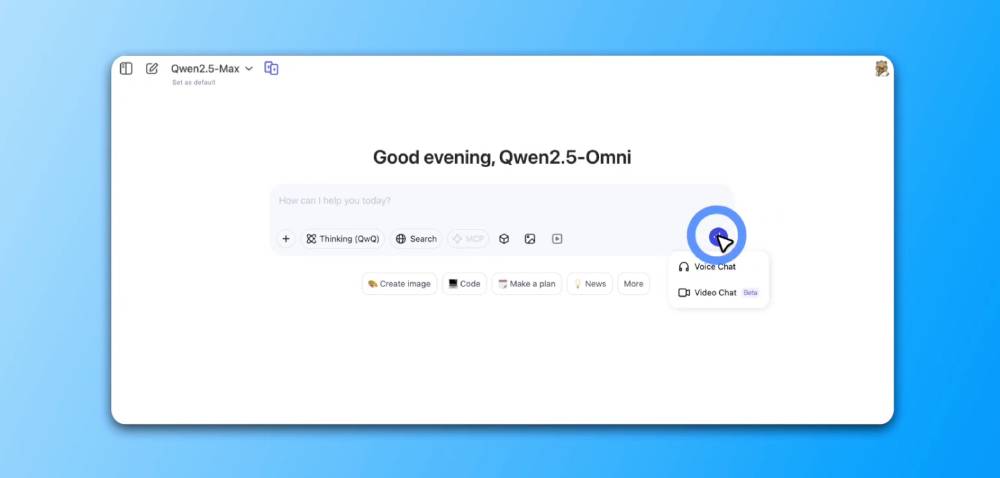

- Voice & Video Chat: Enables local real-time conversation across modalities (yes, you can talk to it like Siri or Alexa, but on your device).

- Thinker-Talker Architecture: Separates core reasoning (Thinker) from speech generation (Talker), enhancing modularity without sacrificing performance.

- TMRoPE (Time-aligned Multimodal RoPE): A position-embedding scheme that aligns timestamps across modalities, so a video’s frames and its audio track are processed as one synchronized input.
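To make the TMRoPE idea concrete, here is a simplified sketch of time-aligned position IDs for an audio-video input. It assumes one temporal position ID per 40 ms of media time and interleaves video and audio tokens in 2-second chunks, video first within each chunk. These values reflect our reading of the Qwen2.5-Omni technical report and should be treated as assumptions. The real scheme also encodes height and width positions for visual tokens, which this sketch omits, and the `Token` type and helper functions are hypothetical.

```python
from dataclasses import dataclass

# Assumption: one temporal position ID per 40 ms of media time.
MS_PER_TEMPORAL_ID = 40
# Assumption: audio and video are interleaved in 2-second chunks.
CHUNK_MS = 2000


@dataclass
class Token:
    modality: str      # "audio" or "video" (hypothetical token type)
    timestamp_ms: int  # media time at which this token starts


def temporal_id(token: Token) -> int:
    """Map a token's timestamp to a temporal position ID shared by all modalities."""
    return token.timestamp_ms // MS_PER_TEMPORAL_ID


def interleave(video: list[Token], audio: list[Token]) -> list[tuple[str, int]]:
    """Interleave video and audio chunk by chunk (video first, then audio, an
    assumed ordering), tagging each token with its time-derived temporal ID so
    tokens from the same moment share a position even if they sit apart."""
    if not video and not audio:
        return []
    end_ms = max(t.timestamp_ms for t in [*video, *audio])
    out: list[tuple[str, int]] = []
    for start in range(0, end_ms + 1, CHUNK_MS):
        stop = start + CHUNK_MS
        for stream in (video, audio):
            for tok in stream:
                if start <= tok.timestamp_ms < stop:
                    out.append((tok.modality, temporal_id(tok)))
    return out


video = [Token("video", 0), Token("video", 1000), Token("video", 2000)]
audio = [Token("audio", 0), Token("audio", 1960), Token("audio", 2040)]
print(interleave(video, audio))
```

The first video frame and the first audio token both receive temporal ID 0, which is how time alignment lets the model treat them as simultaneous even though they occupy different places in the token sequence.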

Here’s a closer look at how the model’s architecture works:

| Component | Role |
|---|---|
| Thinker | Processes text, audio, image, and video inputs; handles understanding, reasoning, and text generation. |
| Talker | Turns the Thinker’s output into natural-sounding speech, streamed as it is generated. |

Both components are trained jointly. The Talker works directly from the Thinker’s internal representations and shares its full conversation context, which keeps spoken replies accurate and natural.
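The division of labor between Thinker and Talker can be illustrated with a toy pipeline. This is not Qwen’s implementation: `Thinker`, `Talker`, the two-number "hidden state", and the speech-token formula are all hypothetical placeholders. What it shows is the data flow, assuming the Thinker streams out text tokens together with a hidden representation for each one and the Talker consumes those steps as they arrive, keeping the accumulated history as shared context.

```python
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class ThinkerStep:
    text_token: str
    hidden: list[float]  # hypothetical stand-in for the Thinker's representation


class Thinker:
    """Toy 'brain': yields text tokens, each paired with a hidden vector.
    A real Thinker is a Transformer over text, audio, and vision embeddings."""

    def generate(self, prompt: str) -> Iterator[ThinkerStep]:
        reply = f"You said: {prompt}"
        for i, word in enumerate(reply.split()):
            # Hypothetical hidden state: word length and position in the reply.
            yield ThinkerStep(word, [float(len(word)), float(i)])


@dataclass
class Talker:
    """Toy 'mouth': turns each Thinker step into a placeholder speech-token ID,
    keeping every step it has seen as shared context."""
    history: list[ThinkerStep] = field(default_factory=list)

    def speak(self, step: ThinkerStep) -> int:
        self.history.append(step)
        # Hypothetical speech token that depends on both text and hidden state.
        return (sum(map(ord, step.text_token)) + int(sum(step.hidden))) % 4096


def respond(prompt: str) -> tuple[str, list[int]]:
    thinker, talker = Thinker(), Talker()
    words: list[str] = []
    speech: list[int] = []
    for step in thinker.generate(prompt):
        words.append(step.text_token)
        # The Talker handles each step before the Thinker produces the next one.
        speech.append(talker.speak(step))
    return " ".join(words), speech


text, speech = respond("hello")
print(text, speech)
```

Because `respond` hands each step to the Talker as soon as the Thinker yields it, speech output can begin before the text reply is complete, which is the property a streaming voice mode relies on.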

Real-Time, Streamlined, and Local

One of the truly remarkable features is Qwen2.5-Omni’s real-time, low-latency performance. Its encoders process streaming input block by block, and combined with efficient memory handling, this lets the model start responding before an input has finished arriving and handle long contexts on-device.

Streaming lets the model:

- React to new input on the fly (ideal for voice assistants or video analysis)

- Handle long video and audio files efficiently
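Block-wise streaming can be sketched in a few lines. In this toy version a running mean stands in for the real audio and vision encoders, and the function names, block size, and window length are illustrative assumptions. The point is that each block produces output as soon as it is complete, and only a fixed number of recent block summaries is kept in memory, however long the stream runs.

```python
from collections import deque
from typing import Iterable, Iterator


def blocks(stream: Iterable[float], block_size: int) -> Iterator[list[float]]:
    """Group an incoming stream into fixed-size blocks, yielding each block
    as soon as it is full (the final block may be shorter)."""
    buf: list[float] = []
    for sample in stream:
        buf.append(sample)
        if len(buf) == block_size:
            yield buf
            buf = []
    if buf:
        yield buf


def stream_process(stream: Iterable[float], block_size: int = 4, window: int = 2) -> Iterator[float]:
    """Toy block-wise encoder: after each block, emit the mean of the last
    `window` block summaries. At most `window` summaries are stored, so memory
    stays bounded regardless of stream length."""
    recent: deque[float] = deque(maxlen=window)
    for block in blocks(stream, block_size):
        recent.append(sum(block) / len(block))  # hypothetical per-block "encoding"
        yield sum(recent) / len(recent)


print(list(stream_process(range(10))))
```

Because `stream_process` is a generator, a caller can read the first result after a single block instead of waiting for the whole file to arrive.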

Benchmark Performance: A Model That Dominates

Qwen2.5-Omni isn’t just flashy tech; it delivers results that go toe-to-toe with top-tier models. Check out the benchmark scores below:

| Benchmark | Score | Comparison |
|---|---|---|
| OmniBench – Multimodal Reasoning | 56.1 | Beats Gemini 1.5 Pro (42.9) |
| MMAU – Audio Understanding | 65.6 | Beats Qwen2-Audio (49.2) |
| MVBench – Video Understanding | 70.3 | Outperforms Qwen2.5-VL (69.6) |
| Seed-TTS-Eval – Speech Naturalness | 93.5 | On par with human scores (93.2) |
| NMOS+ – Speech Quality (Mean Opinion Score) | 4.51 | On par with human-generated speech |
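Because the table mixes large and small leads, it helps to compute the gaps directly. The snippet below copies the four rows that have a numeric comparison (the NMOS row has none) and reports each difference in points; it says nothing about statistical significance, and the `SCORES` and `margins` names are illustrative.

```python
# Scores copied from the benchmark table; the NMOS row has no numeric comparator.
SCORES = {
    "OmniBench": (56.1, 42.9, "Gemini 1.5 Pro"),
    "MMAU": (65.6, 49.2, "Qwen2-Audio"),
    "MVBench": (70.3, 69.6, "Qwen2.5-VL"),
    "Seed-TTS-Eval": (93.5, 93.2, "human reference"),
}


def margins(scores: dict[str, tuple[float, float, str]]) -> dict[str, float]:
    """Point difference between Qwen2.5-Omni and each comparison, rounded to 0.1."""
    return {name: round(omni - other, 1) for name, (omni, other, _) in scores.items()}


for name, gap in margins(SCORES).items():
    print(f"{name}: {gap:+.1f} points vs {SCORES[name][2]}")
```

The differences range from 16.4 points on MMAU and 13.2 on OmniBench down to 0.7 on MVBench and 0.3 on Seed-TTS-Eval.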

Where to Access Qwen2.5-Omni

Ready to try it out? The model and everything you need to run it are open and freely available:

License: Apache 2.0 (commercial use allowed)

Bonus: Comes with full inference tools and documentation for local execution!

Community Buzz & Commentary

Here’s what leading developers had to say:

Niels Rogge: “Not only did they release the 7B model behind Qwen Chat’s voice mode with an Apache 2.0 license, they also integrated it into Transformers from day one.”

Dr. Daniel Bender: “The memory requirements for processing video locally are heavy: 60+ GB for a 1 min clip using BF16. This sets a new bar for local inference capability.”

Leonardo Silva: “When will a dedicated app for Qwen AI be available? This is the future of multimodal interfaces!”
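A back-of-the-envelope calculation puts Dr. Bender’s memory figure in context. It takes the 7B parameter count from Niels Rogge’s comment at face value (the full checkpoint, with its encoders and Talker, may be larger) and assumes 2 bytes per parameter for BF16 weights. The `weight_gb` helper and dtype table are illustrative, and 60 GB is used as the lower bound implied by "60+ GB".

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_gb(num_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Assumption: 7B parameters, as stated in the quote; the real checkpoint may differ.
params = 7e9
w = weight_gb(params, "bf16")
reported_total_gb = 60.0  # lower bound from "60+ GB for a 1 min clip using BF16"

print(f"BF16 weights: {w:.1f} GB")
print(f"Non-weight memory for a 1-minute clip: at least {reported_total_gb - w:.1f} GB")
```

Under these assumptions the weights account for about 14 GB, so most of the reported 60+ GB goes to what a one-minute video generates at inference time, such as activations, the attention cache, and encoded frame and audio features.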

What’s Next in Multimodal AI?

While giants like Google and OpenAI push forward, the open-source ecosystem has found its next champion in Qwen2.5-Omni. Whether you’re an AI researcher, indie developer, or simply passionate about the next phase of intelligent assistants—this is a model worth exploring today.

Want to start building? Access guides and try it live from the official Qwen launch page.

Want to Stay Ahead?

Sign up for more updates like this via the AlphaSignal newsletter, the go-to source for top 1% news in AI.
